Groundbreaking new storage technologies like NVMe and 3D XPoint are disrupting data storage practices as we know them. With higher-capacity storage media and the much faster read/write speeds these technologies enable, enterprises have a host of new scalable storage tiering options at their disposal.

Optimizing storage protocols at the application layer means navigating the complex new ecosystem born out of these technologies. Solutions providers and ISVs must, therefore, rapidly attain competency with these new storage technologies in order to meet the demands of their market. Partnering with a value-added system integrator can flatten the learning curve while reducing the potential risks associated with global enterprise application deployment.

This is part III of our analysis of the changing landscape of storage technology. Part I covered the new technologies and how their capabilities expand on past options. Part II discussed the ways in which enterprises need to adapt to best use these new technologies, along with how solutions providers must adapt in turn.

This concluding part examines the role that partnering with a value-added system integrator can play in shortening time to market while reducing development hurdles. By using hardware-based or virtualized appliance builds and the latest standards in hardware interfacing, system integration partners can offer solutions providers these benefits while improving the foundation of their product line as a whole.

What New Storage Tiering Options Mean for Solution Developers

Technology providers who supply enterprises with the applications they need have faced increasingly complex ecosystems in recent years. Among other concerns, virtualized solutions like NFV and computing shared between cloud-based and on-site systems raise many potential issues for these developers, including:

- Performance bottlenecks

- Security vulnerabilities

- Latency

- Data usage optimization

In regard to the last two problems, the aforementioned storage disruptions present a significant challenge to developers and ISVs. Technologies like NVMe are only utilized to their full potential when solutions can physically comply with their unique demands.

Should an application fail to make the most of such protocols, it can force its potential users to consider the opportunity costs. Few enterprises want to buy a solution that unnecessarily slows down and complicates their network-based operations. Instead, they will look to products that can reduce friction between critical functions while optimizing performance.

In terms of storage tiering, these priorities mean that developers must consider the needs of their target market when creating solution builds. New storage options mean that the way those solutions read, write, and transfer data must be compliant with the latest standards and expectations.

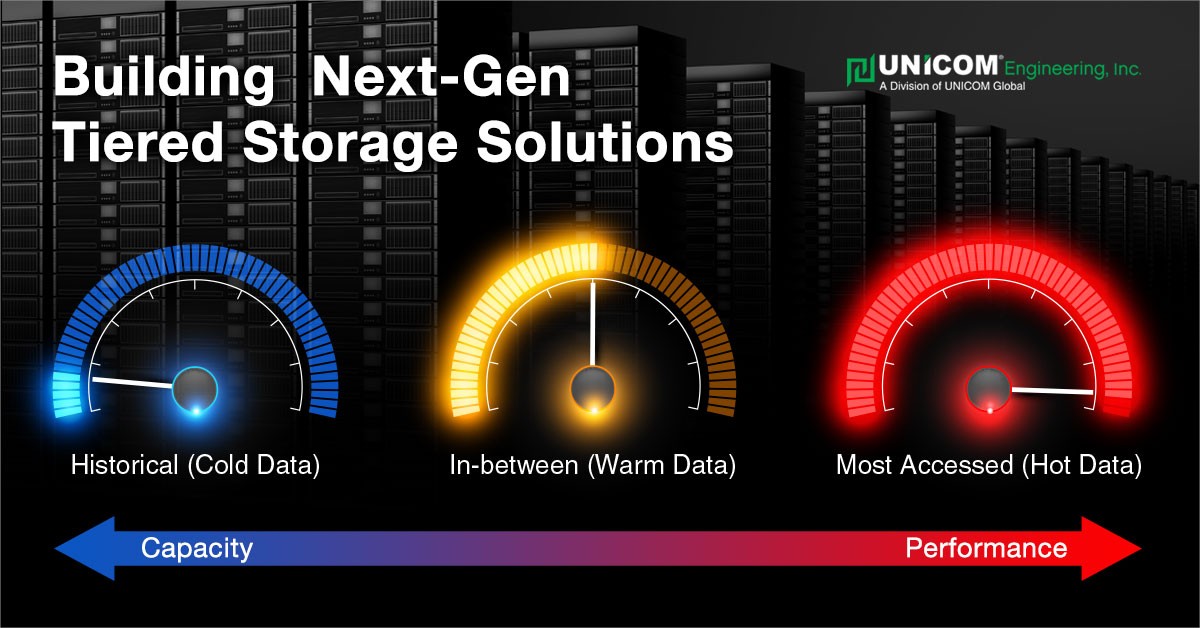

For instance, an application monitoring network traffic in a data center and performing analytics will capture huge volumes of data at a time. Captured data often needs to be kept for long periods of time, but the older it becomes, the less often it is accessed. The most accessed, most recent data (aka hot data) often requires low-latency, high-performance storage to feed real-time reporting, analytics, and rules implementation. Older historical data (aka cold data) may need to be kept available for weeks, months, or years for reporting and pattern matching, but it does not require the low-latency, high-performance storage needed for real-time analytics.

If the appliance only accesses cold data when needed, should older data be kept on more affordable HDD arrays? Should hot data be kept on 3D XPoint NVMe storage for the lowest possible latency, allowing real-time analytics to determine possible threat profiles? What about “in-between” data (aka warm data) that is still accessed on a frequent, regular basis, but not by services that require the fastest possible performance? This is fast becoming an area where NAND-based SSDs are filling the price/performance gap.

Questions like these concern critical software decisions, but they also involve hardware configuration decisions in order to make the best use of available options. For this reason, UNICOM Engineering recommends releasing applications within hardware or virtualized appliances rather than as a stand-alone software product.
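
To make the hot, warm, and cold placement questions above concrete, here is a minimal Python sketch of how an application might choose a tier for captured data. Everything in it is an illustrative assumption rather than a recommended policy: the `CaptureRecord` type, the `choose_tier` function, and the one-day and thirty-day thresholds are hypothetical, and a real appliance would tune them against its own hardware and workload.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from enum import Enum


class Tier(Enum):
    HOT = "3D XPoint NVMe"   # lowest latency, for real-time analytics
    WARM = "NAND SSD"        # frequent access without real-time demands
    COLD = "HDD array"       # long-term retention at lower cost


@dataclass
class CaptureRecord:
    """Hypothetical description of one block of captured traffic data."""
    name: str
    last_accessed: datetime
    needs_realtime: bool = False  # read by real-time reporting or rules?


# Assumed thresholds, chosen only for illustration.
HOT_WINDOW = timedelta(days=1)
WARM_WINDOW = timedelta(days=30)


def choose_tier(record: CaptureRecord, now: datetime) -> Tier:
    """Pick a storage tier from access recency and latency requirements."""
    age = now - record.last_accessed
    # Hot: recently accessed AND consumed by latency-sensitive services.
    if record.needs_realtime and age <= HOT_WINDOW:
        return Tier.HOT
    # Warm: still accessed regularly, but not on the real-time path.
    if age <= WARM_WINDOW:
        return Tier.WARM
    # Cold: kept for reporting and pattern matching, rarely read.
    return Tier.COLD
```

In this sketch, data is only placed on the fastest tier when it is both recent and on the real-time path, which keeps the most expensive storage reserved for the workloads that benefit from it.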

How Appliance Builds Improve Application Performance and UX

With the help of a value-added system integrator like UNICOM Engineering, your solutions can be packaged as a discrete appliance ready for rapid installation. Since the appliance build accounts for much of the product's hardware needs, has its own secure OS, and comes ready with the most in-demand interface ports, it operates stably and is practically ready for “plug and play” performance in most scenarios.

Our main goal is seamless and trouble-free operation, no matter the expected deployment environment. We also assist partners with developing a range of hardware products in order to meet customer demands at different price points or performance tiers.

In addition to our integration capabilities, UNICOM Engineering can provide:

- Design engineering consulting

- Global logistics and compliance

- Global product support

- Business analytics reporting

With these services and our expertise, your company can prepare to meet the new future of storage tiering head-on. To learn more about how we can help you navigate evolving storage technologies and bring next-gen solutions to market, contact us today.